“you cannot step into the same river, for fresh waters are ever flowing”1

Entropy is a fundamental concept representing change and flow in the universe. It reflects both the transformation of usable energy and the informational uncertainty that life-forms must navigate.

In a recent article on inequality I incorporated the concept of entropy, so it seemed worthwhile to explain this much-misunderstood concept:

This article2 explores how living organisms harness usable energy (utility) amid the overarching trend toward increasing informational complexity.

Table of Contents

- What is Entropy?

- Utility & Usefulness

- Energy Deltas

- Will-o’-the-Wisp Nature

- Entropy Cycles

- The Seeming Paradox of A Structured Star

- Entropy and Information

- Rivers And Seas of Entropy

- The Impossibility Of Knowing The Future

- Information Uncertainty

- Footnotes

What is Entropy?

Let us start by being clear about what entropy is not:

- Entropy is not quite “decay”3, and is not really “disorder” or “chaos”.

- Entropy is also not quite “time”4 – though it is more closely related to time, given that it concerns the flux and flow of energy and matter.

OK, so what is entropy?

Entropy is the signature, the pattern or mathematical description of movement and change (hence its strong correlation with time). It describes and drives the transformation of matter through energetic interactions. It gives us this extraordinary universe where things happen, and where, at a very fine-grained scale, they happen unpredictably. It maximises possibilities – the ultimate engine of creativity and change.

Life exists by drawing on usable energy (known as exergy), and this use is offset by a corresponding export of waste by life-forms into the surrounding environment (though here we also need to be careful, since one life-form’s waste is another life-form’s usable energy!).

“Entropy is the loss of energy available to do work. Another form of the second law of thermodynamics states that the total entropy of a system either increases or remains constant; it never decreases. Entropy is zero in a reversible process; it increases in an irreversible process.”5

Utility & Usefulness

One general description of entropy is as the lack or loss of useful energy, or of useful information about the state of things, with which to do ‘useful’ work (utility). Utility, in this sense, is the capacity to do non-random (potentially useful) work with available free energy.

We tend to think of “utility” as a subjective economic term, a measure of how much we desire, say, a new car or a smartphone. But if we peel back our anthropocentric bias, utility reveals itself as a more fundamental property: the ability to use free energy to build, maintain and resist (inertia) the forces of destruction and unpredictability.

In a universe governed by the Second Law of Thermodynamics, where every natural process tends toward equilibrium with everything else (which ultimately means death), “usefulness” is the rare ability to swim upstream. It is the capacity to harness free energy (like sunlight) and use it to create order, structure, knowledge and complexity.

The word ‘entropy’ was coined by Rudolf Clausius in 1865 from the Greek words en (in) and tropē (transformation or turning). It was deliberately constructed to resemble “energy” while conveying the idea of transformation. This is very apt, given how closely entropy and energy are intertwined, and given that energy changes or transforms as it moves but is not usually destroyed.6 Many readers who have met this concept before are likely, as I was, to be perplexed by it.

- Why is the notion so hard to explain and understand, and so prone to misunderstanding?

- Is entropy a description of physical disorder and deconstruction?

- Are we really measuring indeterminacy and randomness?

- Maybe it is something else that we can’t quite explain?

“I refuse to answer that question

on the grounds that I don’t know the answer”7

(Douglas Adams)

Energy Deltas

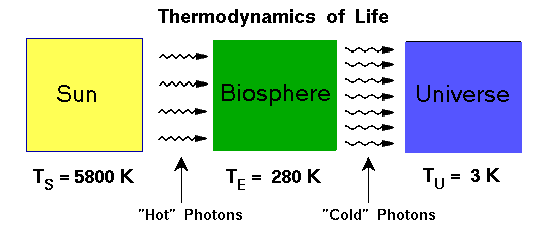

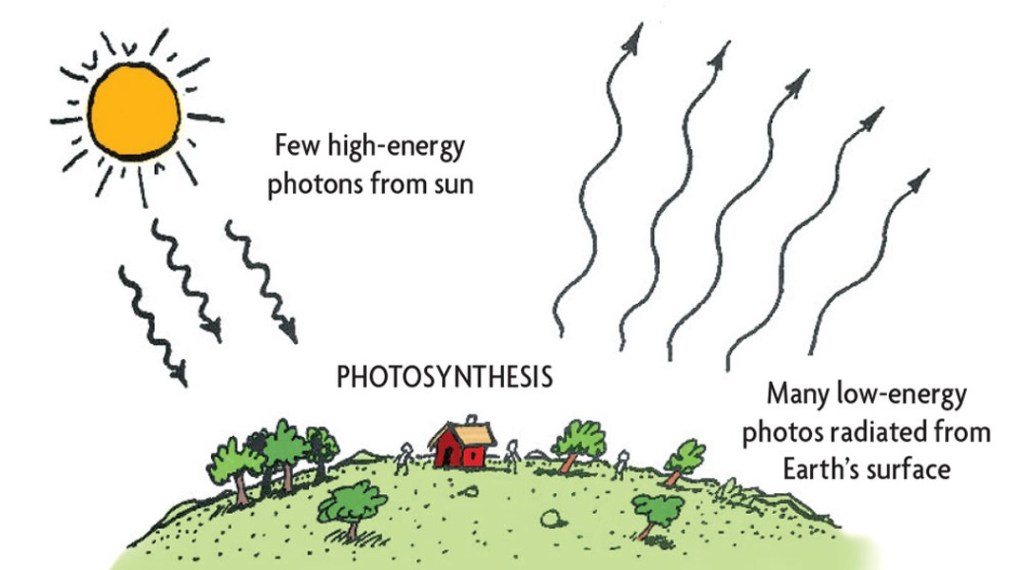

In the case of the Earth, we are told that the process of life involves all life-forms (and all matter within the biosphere used to build living organisms) taking in energy from the Sun at a higher frequency and releasing the same amount of energy back into the environment and the universe at a lower frequency.

The usefulness of energy is therefore related to the usable gradients, or deltas, between different types of energy. In other words, where heat energy is concerned, differentiation is life and uniformity is death.
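One standard way to measure how useful a temperature delta is comes from the Carnot limit. This is the largest fraction of heat that any engine running between a hot reservoir at T_hot and a cold one at T_cold can turn into work: 1 − T_cold/T_hot.

The sketch below makes several assumptions:
- the reservoirs are idealised;
- the temperatures are rounded illustrative values: about 5,772 K for the Sun's surface, 288 K for the Earth's surface and 310 K for a human body.

It illustrates "differentiation is life" in numbers. It is not a model of how organisms actually harvest energy.

```python
def carnot_limit(t_hot_k: float, t_cold_k: float) -> float:
    """Largest fraction of heat convertible to work between two reservoirs (kelvin)."""
    if t_cold_k <= 0 or t_hot_k <= t_cold_k:
        # No temperature gradient (or invalid input): nothing can be extracted as work.
        return 0.0
    return 1.0 - t_cold_k / t_hot_k


# Approximate, rounded temperatures in kelvin (illustrative inputs).
pairs = {
    "Sun's surface vs Earth's surface": (5772.0, 288.0),
    "human body vs a 20 °C room": (310.0, 293.0),
    "two bodies at the same temperature": (300.0, 300.0),
}

for label, (hot, cold) in pairs.items():
    print(f"{label}: at most {carnot_limit(hot, cold):.1%} can become work")
```

With these inputs the limits are:
- about 95% for the Sun-to-Earth gap;
- about 5% for a body in a 20 °C room;
- exactly zero when the two temperatures match, because uniformity leaves nothing to work with.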

Natural processes are subject to the second law of thermodynamics (whether we regard it as a fundamental law or as an overwhelmingly reliable statistical description):

“every natural process has in some sense a preferred direction of action. For example, the flow of heat occurs naturally from hotter to colder bodies…”9

Most of the natural processes we are aware of are not reversible (e.g., the dead do not come back to life and broken eggs stay broken).10
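The preferred direction of heat flow can be checked with Clausius's rule: a body that gains heat Q at temperature T gains entropy Q/T.

This is a minimal sketch with two assumptions:
- the bodies are two large reservoirs whose temperatures do not change;
- the values of 400 K, 300 K and 1,000 J are arbitrary.

```python
def total_entropy_change(heat_j: float, t_hot_k: float, t_cold_k: float) -> float:
    """Total entropy change (J/K) when heat_j joules pass from the hot body to the cold one.

    Both bodies are treated as large reservoirs whose temperatures stay fixed,
    so each one's entropy changes by (heat gained) / T, following Clausius.
    A negative heat_j means heat flowing the "wrong" way, from cold to hot.
    """
    ds_hot = -heat_j / t_hot_k    # entropy change of the hot body
    ds_cold = heat_j / t_cold_k   # entropy change of the cold body
    return ds_hot + ds_cold


print(total_entropy_change(1000.0, 400.0, 300.0))   # hot -> cold: positive
print(total_entropy_change(-1000.0, 400.0, 300.0))  # cold -> hot: negative, never observed
```

When heat moves from hot to cold, the total entropy rises (by about 0.83 J/K here). The reverse would lower it, which is why we never see it happen spontaneously.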

In respect of life, the applicability of thermodynamic theory can be summarised as follows:

“There are two principal ways of formulating thermodynamics, (a) through passages from one state of thermodynamic equilibrium to another, and (b) through cyclic processes, by which the system is left unchanged, while the total entropy of the surroundings is increased. These two ways help to understand the processes of life.”11

Sir Roger Penrose has written extensively about entropy, most recently in his quest to work out where our universe came from, where it may be going and whether it has a cyclical (natural) quality.

“Usually when we think of a ‘law of physics’ we think of some assertion of equality between two different things … However, the Second Law of thermodynamics is not an equality, but an inequality, asserting merely that a certain quantity referred to as the entropy of an isolated system – which is a measure of the system’s disorder, or ‘randomness’ – is greater (or at least not smaller) at later times than it was at earlier times.”12

As Penrose points out, the more one looks, the more it appears that there is something tricksy about the second law of thermodynamics.

“Going along with this apparent weakness of statement, we shall find that there is also certain vagueness or subjectivity about the very definition of the entropy of a general system.”13

Will-o’-the-Wisp Nature

This concept of entropy has an elusive will-o’-the-wisp nature which makes it very hard to pin down.

This may be because of its asymmetric nature in time, or because of a certain arbitrariness in our definitions or in how we apply them. Perhaps the universe does not have increasing entropy everywhere, in which case it is not an invariant law but a property of our local space-time (galaxy, supercluster etc.). Alternatively, and seemingly more likely, whether initial low entropy is a particularly relevant property of the universe could be entirely a matter of perspective.

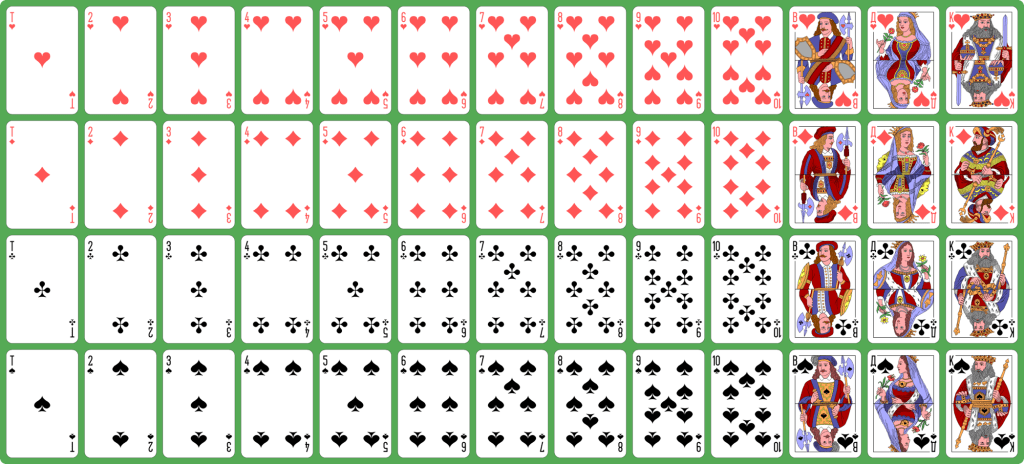

Consider a pack of cards: if they are randomly ordered (shuffled) and then dealt out, what are the chances that 26 red cards would be dealt out first (in any numerical or face-card order) consecutively, and then 26 black (in any order)?

Very … very … very low (1 in 495,918,532,948,104).15

What an extraordinary degree of anti- or negative entropy such a starting position would represent.
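These odds come from counting colour patterns. A fair shuffle makes every choice of which 26 of the 52 positions hold the red cards equally likely, and only one of those choices puts every red first.

Here is a short check using Python's standard `math.comb`. The variable name is illustrative.

```python
from math import comb

# Count the distinct red/black colour patterns of a 52-card deck:
# choose which 26 of the 52 positions hold the red cards.
patterns = comb(52, 26)

# After a fair shuffle every pattern is equally likely, and exactly one of them
# deals all 26 reds first, so the odds are 1 in `patterns`.
print(f"1 in {patterns:,}")  # 1 in 495,918,532,948,104
```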

Yet this highly improbable starting position for a pack of cards would be of absolutely no use or interest to a beetle, a blue whale or some humans – though it might be interesting to a computer scientist, a mathematician, someone who likes to play cards or anyone who understands how unlikely it is.

The simple fact remains that a shuffled pack of cards consisting of 26 red cards and then 26 black cards as its starting position is not in any universal sense more useful than a mixed pack (the statistically more likely starting position), unless you are a life-form that is betting on the colour of the next cards.

We have evolved within space-time, and we are able to ask questions such as how life-forms arose and evolved; as Carlo Rovelli16 explains, the answers to those questions are very important to us. Rovelli’s approach to the question of why the universe began in a state of low entropy offers an interesting way to think about entropy; contrast this with the more formal ideas about gravitational degrees of freedom explored by Sir Roger Penrose.17 As life-forms, we rely heavily on access to free energy from the flow of heat from hot bodies to colder bodies and surrounding space. All life as we know it arises from this process.

Rovelli’s view is that the statistical nature of entropy as a property of the universe may be of interest only to a life-form looking at the universe from its own perspective. From that perspective, the improbably low-entropy configuration (starting point) is very useful, in the same way that a shuffled deck with an unusual initial ordering of red and black cards is useful to a gambler.

Life arises and evolves from the interaction of positive information (always changing but potentially knowable facts or factual backgrounds) and negative capability and freedom of action (statistical deviance from the norm). If everything could be predetermined (known beforehand) then there would be no need for negative capability or freedom to deviate (including with intelligence).

Deviancy is an elegant and natural algorithm for a world in which, in practice, it is not possible to know all facts without doing work on each problem, or even to know which solutions will turn out to be better or worse than other solutions at solving problems in the specific factual landscape that applies. In an infinite and changing universe, actual work to solve problems will always be required. If we imagine a universe where we could get answers to all questions without doing work, then any such universe will not give rise to the need for intelligent life-forms.

Entropy Cycles

We are informed that the Sun is negatively entropic and the Earth is positively entropic. However, we must be careful with these terms. It appears that states and processes of high, low and negative entropy flow and recycle (albeit, overall, the process is running down towards maximum entropy, and warm bodies do not get warmer when in contact with colder bodies).

The Sun’s useful energy is finite and will run out eventually, and so the overall flow of entropy is still increasing. The general and greater flow is still from the lower-entropy, higher-energy state (the smaller, concentrated, hot body: the Sun) to the higher-entropy, lower-energy state (the colder, more distributed background of space). Energy is largely conserved throughout the whole process,18 but entropy increases as energy ultimately becomes less useful to life-forms while the flow of solar energy continues its journey through space-time away from the Sun.

The Sun is considered a source of negative entropy from the perspective of life-forms on Earth, even though it creates entropy in its formation and fusion processes. Fusion at the heart of each star converts lighter atomic nuclei into heavier ones, and the small difference in mass is released and radiated as heat and light, i.e., pure energy.
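The size of that mass difference is easy to estimate. The sketch below makes two assumptions:
- it uses the net reaction of the Sun's fusion chains, in which four hydrogen-1 atoms end up as one helium-4 atom;
- it uses standard atomic masses rounded to eight decimal places.

The constant names are illustrative.

```python
# Standard atomic masses in unified atomic mass units (u), rounded.
M_HYDROGEN_1 = 1.00782503
M_HELIUM_4 = 4.00260325
MEV_PER_U = 931.494  # energy equivalent of 1 u, in MeV

# Net result of the Sun's fusion chains: four hydrogen-1 atoms -> one helium-4 atom.
# Using atomic (not bare-nucleus) masses keeps the electron and positron bookkeeping consistent.
mass_in = 4 * M_HYDROGEN_1
mass_defect = mass_in - M_HELIUM_4

print(f"mass converted: {mass_defect:.5f} u ({mass_defect / mass_in:.2%} of the input)")
print(f"energy released per helium-4 formed: {mass_defect * MEV_PER_U:.1f} MeV")
```

About 0.7% of the mass is converted, roughly 26.7 MeV for each helium nucleus formed. A small share of that energy leaves the Sun as neutrinos rather than as heat and light.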

Ilya Prigogine won the 1977 Nobel Prize in Chemistry for his work on what he called dissipative structures. Prigogine showed that in systems far from equilibrium, like a hot primordial soup of molecules or a biosphere under a star, matter can spontaneously self-organise. These structures maintain their beautiful internal order precisely by dissipating energy and generating entropy at a furious rate. Life is the ultimate dissipative structure; we are temporarily stable structures and patterns within a never-ending flow of energy.

Through fusion, the Sun radiates energy at a temperature (roughly 5,800 K at its surface) vastly higher than that of the cosmic background (about 2.7 K) and of its local surroundings in space. The difference, or delta, between the energy arriving on Earth from the Sun and the energy arriving from the rest of the universe gives rise to usable energy on Earth for life-forms. This energy ultimately leaks back out into the universe, which prevents the Earth from overheating.19
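One way to see why this delta matters is to compare the entropy the Earth receives with the entropy it sends back out. For blackbody radiation, energy E at temperature T carries entropy 4E/(3T).

The sketch below makes three assumptions:
- both streams are treated as simple blackbody radiation;
- the temperatures are approximate: 5,772 K for sunlight and 255 K for the Earth's effective emission to space;
- the Earth is in steady state, re-emitting as much energy as it absorbs.

```python
# Approximate temperatures in kelvin (simplifying assumptions, see text).
T_SUNLIGHT = 5772.0       # effective temperature of the Sun's surface
T_EARTH_EMISSION = 255.0  # effective temperature at which Earth radiates to space


def radiation_entropy(energy_j: float, temperature_k: float) -> float:
    """Entropy (J/K) carried by blackbody radiation with this energy: S = 4E / (3T)."""
    return 4.0 * energy_j / (3.0 * temperature_k)


# Steady state: every joule absorbed as sunlight is re-emitted as infrared.
energy = 1.0
s_in = radiation_entropy(energy, T_SUNLIGHT)
s_out = radiation_entropy(energy, T_EARTH_EMISSION)

# Mean blackbody photon energy is about 2.7 kT, so for a fixed energy the
# photon count scales as 1/T: the same ratio counts photons out per photon in.
ratio = s_out / s_in
print(f"entropy out / entropy in: {ratio:.1f}")
print(f"infrared photons emitted per solar photon absorbed: about {ratio:.0f}")
```

Under these assumptions the Earth exports more than 20 times as much entropy as it receives. That entropy is carried by over 20 low-energy infrared photons for each incoming solar photon.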

When we speak about life processes being negatively entropic, we do not mean in exactly the same way that a star is. We are referring to the capacity of life-forms to consume and recycle the free energy from a star to create unique information, to do work, to recycle matter. To recycle death.

Looked at from a higher dimension or systems level, life-forms are really agents of entropy in their use of free energy to create even more potential information, matter and energy microstates within their environment and beyond their planets. Life is entropic in that it tends towards generating a maximum variety of life-forms (the ecological configuration space populated by different species).

Life-forms use information and information structures to help energy flow and dissipate faster; in effect, we speed up the approach to chemical equilibrium.20 Life-forms literally help carry and disperse the cosmic fire.

Whilst Schrödinger (in What Is Life?, 1944) focuses on what life takes in (useful energy, which he called “negative entropy”), Rovelli focuses on what life does: it accelerates complexity and information uncertainty, and it creates waste in order to maintain itself.

“There is a common idea that a living organism is a sort of fight against entropy: it keeps entropy locally low. I think that this common idea is wrong and misleading. Rather, a living organism is a place where entropy grows particularly fast, like a burning fire. Life is a channel for entropy to grow, not a way to keep it low.”21

“You rise beautiful from the horizon on heaven,

living disk, origin of life.

You are arisen from the horizon,

you have filled every land with your beauty.

You are fine, great, radiant, lofty over and above every land.

Your rays bind the lands to the limit of all you have made,

you are the sun, you have reached their limits.

You bind them (for) your beloved son.

You are distant, but your rays are on earth,

You are in their sight, but your movements are hidden.”22

Like the Sun in its burning, life processes create entropy, but the entropy created is more like a waste product to life-forms. So, life processes borrow usable energy from the Sun for some time and use it within life-forms that require it to stay alive and to perpetuate the lifecycle (to create new life-forms that carry the fire). Let us pause there for a moment: the astute reader may have noticed that stars, like the Sun, appear to act contrary to the second law of thermodynamics.

If it is improbable for sand on a beach to spontaneously rearrange itself into a sandcastle (or a sandy Taj Mahal), how can randomly distributed hydrogen atoms in a vast cloud in space arrange themselves into such tightly structured and useful stars?

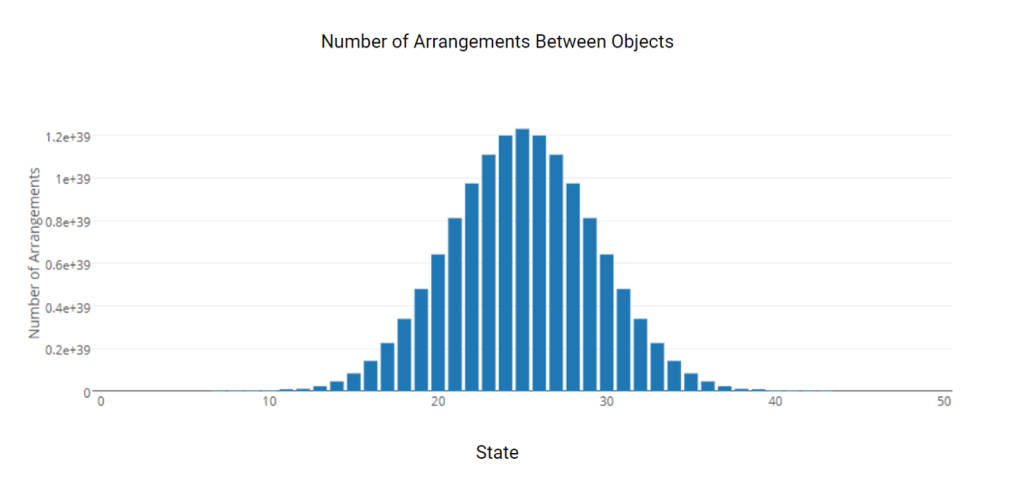

“This histogram illustrates an important principle: As you add more states to your system, it becomes more likely that the arrangement you observe is the most likely arrangement. For example with just 50 states, the odds of observing the least likely arrangement is 1 in 132,931,168,175. Now imagine the sand castle has hundreds of millions23 of grains of sand.”24

If entropy tends always to increase, I wondered whether stars were an example of an eddy in the entropy flow (a temporary reversal), where entropy decreases for a short time even though it is still ultimately tending to maximum entropy.

The Seeming Paradox of A Structured Star

At first glance, stars seem to violate the second law of thermodynamics. The law states that entropy tends to increase, yet a star is a highly structured, organised object formed from a chaotic, dispersed cloud of hydrogen gas.

How can a random cloud spontaneously arrange itself into something as complex and powerful as our Sun?

It looks like a reversal of the natural order—a sandy beach suddenly assembling itself into a castle.

The answer lies in understanding that entropy is not a measure of “order”.

While the gas molecules in a star are indeed packed tighter together (more ordered or structured in physical space), their velocity and momentum are much more unpredictable.

Entropy is a measure of (strictly, is proportional to the logarithm of) the number of possible “microstates”, the different ways a system can be arranged while looking the same from the outside. When a gas cloud collapses into a star, the molecules speed up drastically. This increase in speed means they are dispersed across a much larger range of momentum values. “Phase space”, which accounts for both position and momentum, is where the real entropy count happens.

So, while the star looks tidy and organised to our eyes, in the hidden mathematical dimensions of phase space, it is actually more distributed in phase space than the calmer cloud it was born from. The star is not an eddy reversing the flow of entropy; it is a roaring engine increasing it. This is the key to understanding life: we are not really fighting entropy; we are surfing its waves.

“This is another common source of confusion, because a condensed cloud seems more ‘ordered’ than a dispersed one. It isn’t, because the speed of the molecules of a dispersed cloud are all small (in an ordered manner), while, when the cloud condenses, the speeds of the molecules increase and spread in phase space. The molecules concentrate in physical space but disperse in phase space, which is the relevant one.”25

In addition, the Sun creates new elements, like helium and heavier atoms, and in the process releases nuclear energy locked in hydrogen and other atoms. The released energy further interacts with the existing matter in the star, heating it up. All of this adds to the number of possible microstates of the matter within a star system, compared with a colder, widely distributed and more leisurely cloud of hydrogen atoms.

Along similar lines, it has been suggested that black holes hold the maximum possible entropy. There appears to be no way of knowing or estimating the number of possible microstates of any matter that falls through the event horizon, nor any way to get useful energy back from it.26 Nor does there appear to be any further extractable specific or probabilistic information about that matter.27 To keep the cosmic accounting balanced, black holes must therefore carry enormous entropy, at least as perceived from within this universe.
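The Bekenstein-Hawking formula mentioned in the footnotes makes this concrete. A black hole's entropy is proportional to the area A of its event horizon: S = k_B c³ A / (4 G ħ).

This is a minimal sketch for a non-rotating (Schwarzschild) black hole. It assumes rounded CODATA constants and a solar mass of 1.989 × 10^30 kg.

```python
import math

# Physical constants in SI units (rounded CODATA values).
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8        # speed of light, m/s
HBAR = 1.054571817e-34  # reduced Planck constant, J s
K_B = 1.380649e-23      # Boltzmann constant, J/K
M_SUN = 1.989e30        # assumed solar mass, kg


def bh_entropy_over_kb(mass_kg: float) -> float:
    """Bekenstein-Hawking entropy in units of k_B: S / k_B = A c^3 / (4 G hbar)."""
    r_s = 2 * G * mass_kg / C**2   # Schwarzschild radius of the event horizon
    area = 4 * math.pi * r_s**2    # horizon area
    return area * C**3 / (4 * G * HBAR)


s = bh_entropy_over_kb(M_SUN)
print(f"one-solar-mass black hole: S ~ {s:.1e} k_B (~ {s * K_B:.1e} J/K)")
```

For one solar mass this gives roughly 10^77 in units of Boltzmann's constant. Because the horizon area grows with the square of the mass, doubling the mass quadruples the entropy.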

Entropy and Information

“Going to see the river man

Going to tell him all I can

About the ban

On feeling free.

If he tells me all he knows

About the way his river flows

I don’t suppose

It’s meant for me.”28

(Nick Drake)

Rivers And Seas of Entropy

Despite strenuous efforts, the science and descriptions of entropy remain very difficult for non-scientists (like me) to understand. Even so, it will help to make sure we squarely understand what entropy is. It is some comfort that many eminent scientists acknowledge the difficulty of making sense of ever-elusive entropy. Perhaps this is because entropy is not a thing or a force but a statistical way of looking at the universe and the exchanges between actions and information.

Taking account of all the previous information about entropy and energy, let us put entropy into a practical analogy to make sure we are comfortable with it.

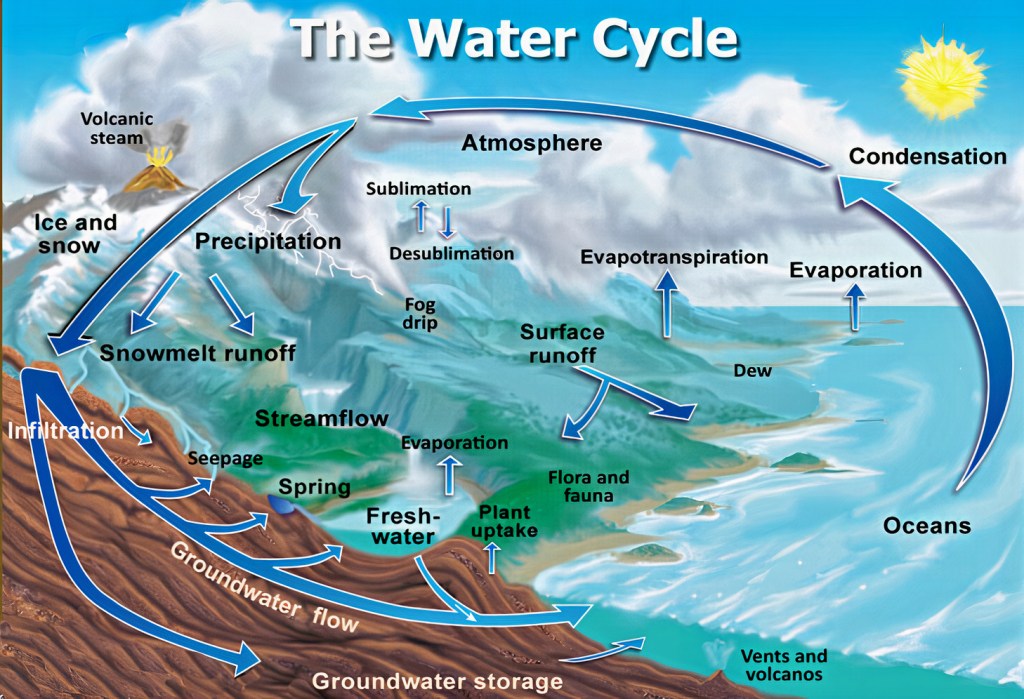

- The entropic process is in some ways similar to the way in which energy changes as it interacts with space-time and matter, or how a river changes speed, meanders and even turns back upstream when it eddies on its way to the sea.

- Entropy is like the journey of rain and a flowing river, where the higher water ‘wants’ to fall (due to gravity) to a lower place, which happens by way of conversion of that (potential)29 gravitational energy into kinetic energy.

- We wish to track (using mathematical guesswork) the likely movement of ten molecules of H2O from a starting point at the highest point of the river to the lowest point.

- The flow of water is always tending towards the sea or other lower places, but the process is not instantaneous and is not at a uniform rate.

- The impact of time and differences in flow rates give us areas or fields (such as pools, floodplains and estuaries) where the water flow slows or stops. Water can even temporarily flow upstream a little in river eddies.

- Some of this water flow eventually reaches the sea or ocean.

Now we do not wish to know the exact location of each molecule (entropy statistical tools will not assist with that).

We do wish to know the probability of each molecule being in a specific location on Earth, but without knowing each molecule’s actual location (its microstate).

We divide the Earth into 1 m² parcels, in which we seek the probability of finding our specific water molecules. When the molecules started their journey, they were in a state in which it was relatively easier for us to assess their probable location. There are far fewer molecules at the sources of rivers and in higher catchment areas than in all the water elsewhere. The places where the most rain falls are also spread over a much smaller area than the places where water finishes falling. So, at the beginning of our probability estimate, we have fewer molecules among which our ten can be ‘hidden’ and a smaller number of parcels (less dispersal).

Very interesting things are possible with life-forms within certain parts of the flow,30 notwithstanding that the flow is still tending towards the higher-entropy state. This flow gives life-forms useful energy, even though, like all natural processes, it is not truly reversible. We can describe the movement of those ten molecules from the start to the end of the falling down process as one of shedding usable (useful) energy, which is why life-forms can take advantage of this flow.31 In the process of doing so, the molecules become more difficult to locate. The measure of entropy here is a mathematical equation that seeks to specify the probability of each molecule being in any microstate at different times.

Using this analogy, one thing becomes obvious. Without perfect, continuous and free knowledge of the location of every molecule of H2O on Earth, guessing the number of possible locations (the microstates) for each H2O molecule becomes increasingly difficult (costly) as the molecules shed flow energy and disperse. The calculation work required is itself a use of energy that creates entropy (heat from computing). Entropy here measures the number of probable microstates of the molecules at each stage of the process.32 It will therefore increase as the energy of each H2O molecule is transformed and shared (or shed) with other matter.
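The river picture can be turned into a toy calculation. It makes two assumptions, purely for illustration:
- each of our ten molecules is equally likely to be in any 1 m² parcel it could have reached;
- the molecules move independently.

For N reachable parcels, the number of microstates is then W = N^10, and the entropy in bits is log2 W. The parcel counts for the headwaters and the floodplain are hypothetical. The ocean figure uses the approximate ocean surface area of 3.6 × 10^14 m².

```python
import math


def positional_entropy_bits(n_molecules: int, n_parcels: int) -> float:
    """log2 of the number of equally likely ways to place the molecules in parcels.

    Assumes distinguishable, independent molecules, each equally likely to be in
    any reachable parcel, so W = n_parcels ** n_molecules.
    """
    return n_molecules * math.log2(n_parcels)


MOLECULES = 10

# Reachable 1 m^2 parcels at each stage of the journey.
stages = [
    ("headwaters", 10**6),              # hypothetical: a 1 km^2 catchment
    ("river and floodplain", 10**10),   # hypothetical
    ("ocean surface", 360 * 10**12),    # approx. area of Earth's oceans in m^2
]

for name, parcels in stages:
    bits = positional_entropy_bits(MOLECULES, parcels)
    print(f"{name:>22}: {bits:6.1f} bits")
```

Each step down the river multiplies the number of places the molecules could be. The entropy grows only with the logarithm of that number, which is why it is a manageable figure even for the whole ocean.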

For the purposes of water and other matter, the Earth largely acts as a closed system (exchanges with the surrounding space are primarily energy exchanges) and so the process can give rise to a recycling of water and the molecules can take up a new higher-energy (lower-entropy) configuration and start the process again. The water cycle process is not truly reversible (i.e., it is not the same water molecules spontaneously flowing from the sea up to the mountains and then into the sky), yet the power of the Sun gives us something very close to reversibility in practice: the natural water cycle. The additional energy required to move the water back up (e.g., from the ocean, sea or lake) to the higher altitude point to restart the flow is a product of the Sun’s continuous supply of free useful energy.

The Impossibility Of Knowing The Future

We must now return briefly to our deviant pack of cards. As discussed, the probability of having 26 red cards dealt consecutively from a well-shuffled pack is extraordinarily low. Now note what happens after the 26th red card is dealt: all of that initial unlikeliness is balanced by complete certainty that the next 26 cards will be black.

The total number of microstates for the remaining cards (if colour is the only quality in question) is now just 1 (i.e., there is no information uncertainty).

The initial improbability of the system is mirrored by the extremely unusual amount of information those initial conditions give us about the nature or microstates of the remaining cards.

Is the extreme improbability of 26 red cards being dealt first just another demonstration of the universal law that makes it near impossible to know the future?

The universe appears to go to great lengths in its laws to avoid absolute pre-determinacy at all scales.

In the example of the first 26 cards being red, whatever configuration the next 26 cards are in, we know – without doing any more work – that each card dealt will be black. There is zero entropy, in information terms, within the remaining pack in respect of the quality or value ‘black’. This move from very high initial uncertainty to absolute certainty of the colour of the cards as they are dealt gives a simple general sense of entropy and negative entropy in information terms.
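The same point can be expressed in bits. After a fair shuffle, every ordering of the undealt reds and blacks is equally likely. The colour uncertainty left in the pack is therefore log2 of the number of such orderings.

Here is a small sketch using `math.comb`, dealing the improbable all-reds-first sequence. The function name is illustrative.

```python
from math import comb, log2


def colour_uncertainty_bits(reds_left: int, blacks_left: int) -> float:
    """Bits needed to pin down the colour order of the cards not yet dealt.

    After a fair shuffle every ordering of the remaining reds and blacks is
    equally likely, so the uncertainty is log2 of the number of orderings.
    """
    return log2(comb(reds_left + blacks_left, reds_left))


# Deal the improbable sequence in which all 26 reds come first.
for reds_dealt in (0, 13, 25, 26):
    bits = colour_uncertainty_bits(26 - reds_dealt, 26)
    print(f"after {reds_dealt:2d} reds: {bits:4.1f} bits of colour uncertainty left")
```

The pack starts with about 48.8 bits of colour uncertainty. By the time the 26th red card is dealt, none is left.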

Information Uncertainty

The concept of information entropy was pioneered by Claude Shannon in a 1948 paper.33 Shannon realised that entropy can also be described in information terms, not just in terms of heat dynamics. Indeed, he showed that information and entropy are directly related and can be said to be different descriptions of the same thing. The more certainty we have about any event, the lower the entropy. The event might be the numbers in a lottery draw, the colour of balls pulled from a jar or the colour of cards to be dealt from a pack. This holds because certainty about the actual microstate within a macrostate means the maximum information is available.34
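To see the formula in footnote 34 at work, here is a minimal Python sketch of Shannon entropy for a discrete set of outcomes. It uses base 2, so the answer is in bits. The function name and the example distributions are illustrative.

```python
import math
from collections.abc import Iterable


def shannon_entropy(probabilities: Iterable[float], base: float = 2.0) -> float:
    """H = -sum(p * log_b(p)) over the outcomes; terms with p == 0 contribute nothing."""
    probs = list(probabilities)
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must be non-negative and sum to 1")
    total = sum(p * math.log(p, base) for p in probs if p > 0)
    # Each term is <= 0, so negate; return a clean 0.0 for a certain outcome.
    return -total if total < 0 else 0.0


print(shannon_entropy([0.5, 0.5]))   # fair coin: 1 bit
print(shannon_entropy([1.0, 0.0]))   # outcome already known: 0 bits
print(shannon_entropy([1 / 6] * 6))  # fair die: about 2.585 bits
print(shannon_entropy([0.9, 0.1]))   # heavily biased coin: about 0.469 bits
```

A certain outcome scores 0 bits and a fair coin 1 bit. A coin that lands heads 90% of the time scores only about 0.47 bits, because we can mostly predict it.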

“Memory and agency utilise the information stored in low entropy and translate it into information readable in the macroscopic world … Traces of the past and decisions by agents … are major sources of everything we call information. In both cases, information is created, … at the expenses of low entropy, in accordance with the second principle. In a fully thermalised situation, there is no space for memories or for agents.”35

(Carlo Rovelli)

The ability to undertake unusual activity requires information and consciousness (including memory and culture), or coded storage mechanisms. These give life-forms a relative advantage in perpetuation, procreation or survival in their uniquely challenging environments. The reason for the correspondence with information is that entropy is a probability analysis, not an attempt to define an actual state of play. Certainty is a situation with no probabilities. Uncertainty can be defined as a probabilistic assessment of our lack of confidence in what will happen next in any event. In addition, the generation of information about past and potential future events is a consequence of ever-increasing entropy.

Why might this be relevant to freedom of action and the ethics of life for life-forms?

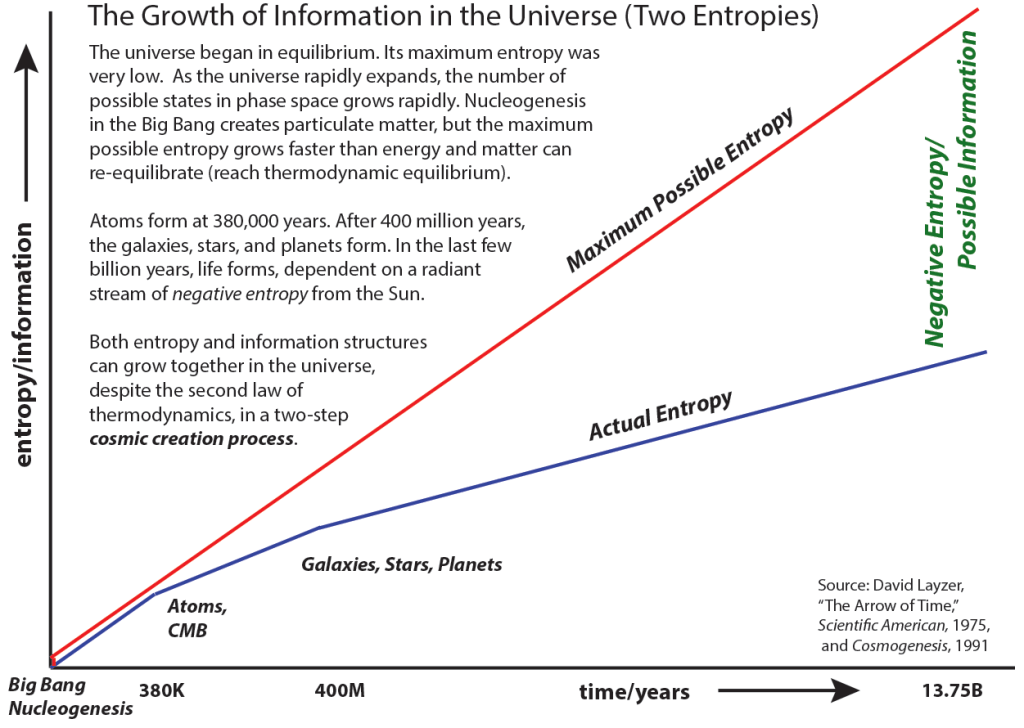

“David Layzer … made it clear that in an expanding universe the entropy would increase, as required by the second law of thermodynamics, but that the maximum possible entropy of the universe might increase faster than the actual entropy increase. This would leave room for an increase of order or information at the same time the entropy is increasing!”36

Life is an emergent complex property that can arise, within a window of opportunity, due to the interplay between different types and locations of energy and matter.

Life-forms live in the delta between the difficulty of knowing or doing anything useful and an increase in available information we have about this condition. At first this seems counter-intuitive since the aggregate information entropy must also increase with general cosmic entropy (thermodynamic equilibrium) but, as with life-forms’ use of usable energy in this overall process, information about the past is useful and we can make measurements to guess at or even mould likely futures.

‘Reality’ unfolds with increasing potential information and memory about what has happened and increased uncertainty about what is happening or going to happen (an increase in aggregate microstate uncertainty).37

Neuroscientist Karl Friston proposes that all biological systems, from single cells to human brains, are driven by a single imperative: to minimise surprise. More precisely, they minimise a quantity called variational free energy, which places an upper bound on surprise. To stay alive, an organism must maintain its internal order against a chaotic world. It does this by constantly predicting what will happen next and acting to fulfil those predictions. In Friston’s view, a living thing is a self-fulfilling prophecy, a statistical model that acts to ensure it doesn’t encounter the ultimate surprise: death.
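Friston's framework is mathematically involved, but its starting point, surprise, has a simple information-theoretic definition. An observation that a model assigned probability p carries −log2 p bits of surprise.

The sketch below computes only that quantity, not Friston's free energy. The environment states, probabilities and observations are invented for illustration.

```python
import math


def surprisal_bits(p: float) -> float:
    """Self-information of an event the model gave probability p: -log2(p) bits."""
    return -math.log2(p)


def mean_surprisal(model: dict[str, float], observed: list[str]) -> float:
    """Average surprise per observation under a model's probabilities."""
    return sum(surprisal_bits(model[o]) for o in observed) / len(observed)


# Hypothetical environment states, models and observations (illustration only).
observations = ["mild", "mild", "cold", "mild", "hot", "mild"]
well_tuned = {"mild": 0.7, "cold": 0.15, "hot": 0.15}
poorly_tuned = {"mild": 0.1, "cold": 0.45, "hot": 0.45}

print(f"well-tuned model:   {mean_surprisal(well_tuned, observations):.2f} bits per observation")
print(f"poorly tuned model: {mean_surprisal(poorly_tuned, observations):.2f} bits per observation")
```

The well-tuned model averages about 1.26 bits of surprise per observation, against about 2.60 for the poorly tuned one. In this simplified sense, a good predictive model is one that is rarely surprised.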

The current success of life-forms is happening within a universe that appears to ultimately be headed towards a condition of maximum entropy (which is heat death – extinction for all living things). In that condition there is likely to be no more useful matter, energy or information from the perspective of any life-forms still alive in this universe through the next 10^106 years or so.38

Don’t despair: that’s a lot of time, and life may yet evolve a form that can jump to another universe or dimension.

So how does life create biological ‘engines’ that can manage this universe of complexity?

We will explore this in Part 2…

Footnotes

- Heraclitus: “Ποταμοῖσι τοῖσιν αὐτοῖσιν ἐμβαίνουσιν ἕτερα καὶ ἕτερα ὕδατα ἐπιρρεῖ” (“You cannot step into the same river; for fresh waters are ever flowing in upon you.”), Wikipedia. ↩︎

- This is based on a chapter of a book I wrote: ‘Ethics of Life’ ↩︎

- Whilst entropy describes the statistical flattening out of energy at the macroscopic scale, the actual mechanism of decay at the atomic level (radioactive decay, for example) is governed by quantum mechanics. ↩︎

- Although the uncertainty about what will happen, and the continuous unpredictability of the movement of matter and energy, are what give us the subjective feeling of time flowing. See, for example, Carlo Rovelli, “Forget Time”, 2008, and YouTube: “Time Is an Illusion”. ↩︎

- Lumen Learning: “Entropy and the Second Law of Thermodynamics: Disorder and the Unavailability of Energy”. ↩︎

- In fact, this is a description of the law of conservation of energy, of which the first law of thermodynamics is a version. Some contend that it may not hold once general relativity is included. Sean Carroll explains that in an expanding universe described by general relativity, energy conservation becomes problematic because there is no unique way to define total energy. ↩︎

- BBC Radio 4, “42 Douglas Adams quotes to live by” ↩︎

- Marek Roland-Mieszkowski, “Life on Earth: flow of Energy and Entropy”, Digital Recordings, 1994. ↩︎

- Massachusetts Institute of Technology, “Axiomatic Statements of the Laws of Thermodynamics”. ↩︎

- However, animals like the immortal jellyfish do something interesting in resetting their biological clocks. ↩︎

- Wikipedia, “Second law of thermodynamics”. ↩︎

- Sir Roger Penrose, Cycles of Time, 2010. ↩︎

- Ibid. ↩︎

- Дмитрий Фомин (Dmitry Fomin), “Playing Cards”, Wikipedia,2014, public domain. ↩︎

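- Roughly 1 in 496 trillion. There are C(52,26) = 495,918,532,948,104 equally likely ways to choose which 26 of the 52 positions hold the red cards, and only one of them puts all the reds first. Thanks to “Picking Probability Calculator (Odds, Card, Marble): Online”, Quora and WolframAlpha. ↩︎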

- Carlo Rovelli, The Order of Time, 2017. ↩︎

- See also Carlo Rovelli, “[1812.03578] Where was past low-entropy?”, Cornell University, 9 December 2018. ↩︎

- Leaving aside the potential impact of general relativity and an expanding universe, see, e.g., Sean Carroll, “Energy Is Not Conserved”, Discover, 22 February 2010. ↩︎

- Image: Barry Evans, “Fighting Entropy”, North Coast Journal, 2018. ↩︎

- Ville R. I. Kaila and Arto Annila, “Natural selection for least action”, The Royal Society, 22 July 2008. ↩︎

- “Carlo Rovelli: ‘Time travel is just what we do every day…’”, The Guardian, 31 March 2019. ↩︎

- Possibly authored by the ‘heretic king’ Pharaoh Akhenaten, “Great Hymn to the Aten”, c. 1350 BCE. ↩︎

- To hurt your head with large numbers, each grain of sand consists of approximately 2 × 10^19 (20,000,000,000,000,000,000) atoms. ↩︎

- “The probability that the sand pile would blow away and land as a sand castle, the least likely random arrangement, is almost incalculably low. But not impossible. It’s just overwhelmingly more likely that the low entropy sand castle turns into a high entropy sand pile. This is why entropy is always increasing because it’s just more likely that it will” (Rich Mazzola, “What is entropy? An exploration of life, time, and immortality”, Medium, 9 May 2020). ↩︎

- The Order of Time, 2017. ↩︎

- On Bekenstein-Hawking entropy, see Wikipedia, “Black hole thermodynamics”. The Bekenstein-Hawking entropy formula shows that black hole entropy is proportional to the surface area of the event horizon. ↩︎

- The holographic principle suggests that all the information may be encoded in a smaller dimension on the event horizon; Wikipedia, “Holographic principle”. See also: Wikipedia, “Black hole information paradox”. ↩︎

- “River Man”, Five Leaves Left, 1969. ↩︎

- Potential because, as you will know, some water does not make it down, e.g., it stops in a tarn at the top. ↩︎

- Transition zones between the river and the sea are very useful places for life as all that energy must continue its flow and transformation. See, for example, Rocky Geyer, “Where the rivers meet the sea: the transition from salt to fresh water is turbulent, vulnerable, and incredibly bountiful”, Oceanus, 2005, 43(1). ↩︎

- Indeed, humans also tap some of this energy directly, e.g., with mills and hydro-electric installations. ↩︎

- The actual entropy number is proportional to the logarithm of the number of microstates (Boltzmann’s S = k ln W). ↩︎

- “A Mathematical Theory of Communication”, 1948. ↩︎

- $H(X) = -\sum_{i} p(x_i) \log_b p(x_i)$, where $p(x_i)$ is the probability of each outcome $x_i$ in a set of possible outcomes $X$, and $b$ is the base of the logarithm, which determines the units of entropy. A common choice is $b = 2$, with the unit being “bits”. The negative sign outside the summation ensures that the entropy is a non-negative value. ↩︎

- Carlo Rovelli, “Agency in Physics”, https://arxiv.org/pdf/2007.05300, 2020. ↩︎

- “It is perhaps easier for us to see the increasing complexity and order of information structures on the earth than it is to notice the increase in chaos that comes with increasing entropy, since the entropy is radiated away from the earth into the night sky, then away to the cosmic microwave background sink of deep space … if the equilibration rate of the matter (the speed with which matter redistributes itself randomly among all the possible states) was slower than the rate of expansion, then the ‘negative entropy’ or ‘order’ … would also increase” (David Layzer, “Arrow of Time”, The Information Philosopher). ↩︎

- See, e.g., Carlo Rovelli, “Memory and information”, 2020. ↩︎

- The number of years in which it is expected that supermassive black holes will lose their energy due to Hawking radiation, after which space may no longer exist (as there may be no matter in it to differentiate between locations or structure the field for such interactions) and the universe may go back to a singularity and then a new Big Bang… or something else! ↩︎
