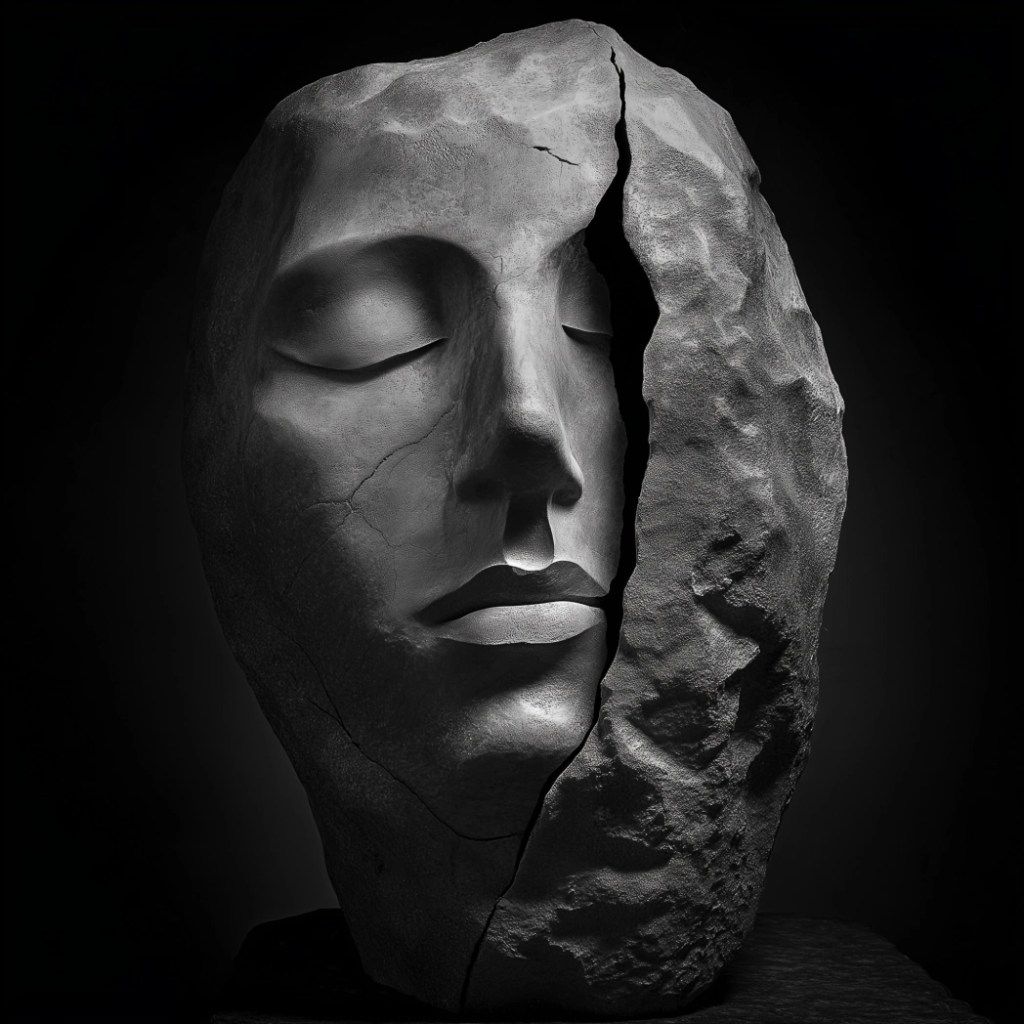

Experimental AI album

I also tried my hand at an AI-generated album, ‘The Silence Inside’: a mixture of some of my poetry and instrumental music, all made using similar prompts to give the whole thing a (hopefully) coherent sound. Unfortunately, AI cannot yet produce studio-quality tracks.

Listen With or Without Prejudice

Notes:

AI music generators (like Suno.ai) leave a lot of artefacts (including shimmering and hissing). This is due to the way they create music. The process involves the following stages:

- Prompt Interpretation (NLP): When a user enters a text description (e.g., “neo-classical piano with iceberg sounds”) or custom lyrics, Suno uses a language model to understand the creative vision, mood, genre, and specific instructions.

- Music Generation: The interpreted text is used to condition a generative audio model, using a combination of transformer-based and diffusion techniques.

- Data Training: Before any of this happens, the AI model has been trained on a massive dataset of music, learning patterns, chord structures, melodies, rhythms, and how these elements correlate with different descriptions and tags.

- Audio Generation: The AI model generates the raw audio waveform from scratch each time, effectively bringing sounds out of a sort of white noise. It essentially “learns” how instruments and vocals sound and how they should progress together.

- Vocal Synthesis: A specialised text-to-singing model is used to generate human-like vocals that align with the provided lyrics, timing, and style of the song. This model is adept at creating natural phrasing and emotional nuances.

- Post-Processing: The generated components (instrumentals and vocals) can then be mixed and “mastered” in applications like BandLab (for amateurs like me) or with digital audio workstation (DAW) techniques.
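
To give a feel for where the hiss might come from, here is a toy Python sketch of the "bringing sounds out of white noise" idea. It is not how Suno works internally: the function name `toy_denoise`, the 440 Hz target tone, and the rule of removing half the remaining noise per step are all my own assumptions, chosen only for illustration. The point is simply that if a process starts from noise and cleans it up in a limited number of steps, some noise is always left over.

```python
import math
import random


def toy_denoise(steps, n_samples=2000, seed=0):
    """Toy stand-in for iterative denoising. NOT Suno's actual method."""
    rng = random.Random(seed)
    # Hypothetical "clean" signal the process is aiming for:
    # a 440 Hz sine wave sampled at 8 kHz.
    target = [math.sin(2 * math.pi * 440 * i / 8000) for i in range(n_samples)]
    # Start from pure white noise.
    audio = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    for _ in range(steps):
        # Assumed rule: each step removes half of the remaining error.
        audio = [a + 0.5 * (t - a) for a, t in zip(audio, target)]
    # RMS of what is still left over: the residual "hiss".
    residual = math.sqrt(
        sum((a - t) ** 2 for a, t in zip(audio, target)) / n_samples
    )
    return audio, residual


if __name__ == "__main__":
    for steps in (1, 4, 8):
        _, hiss = toy_denoise(steps)
        print(f"{steps} steps -> residual noise RMS {hiss:.4f}")
```

In this sketch the leftover noise shrinks with every extra step but never quite reaches zero. A real generator is far more sophisticated, so treat this only as a way of picturing the problem.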
